The team behind Ubuntu Linux is on a mission to redefine how users issue commands to software applications. In a blog post entitled “Introducing the HUD. Say hello to the future of the menu.”, Mark Shuttleworth explains the approach they are researching.

The concept of seamlessly integrating an “intelligent” command line into a modern graphical user interface has been around for quite a while in the form of utilities like LaunchBar, Alfred, or Quicksilver.

These programs let you not only find files but also search application-specific data like address book contacts, music tracks, and browser bookmarks, and apply meaningful operations to the search results.

While not quite as powerful, the system-wide search features in Windows 7 and Mac OS X also transcend simple searches based on the files’ names or their content.

What is new about the Ubuntu HUD is its scope: Instead of operating on just files and data, the HUD can also find and execute commands from the application’s menu.

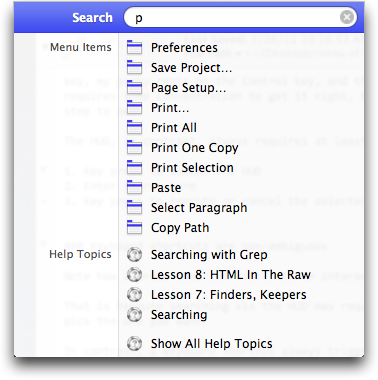

Apple uses a similar approach with a text field inside the Help menu, which lets you search the entire menu structure of the currently active application as well as the app’s help file.

The key difference between the two approaches is that Apple designed the menu search as an extension of the contextual Help system. As such, it complements the application’s menu.

In contrast, the Ubuntu team intends the HUD to replace the menu bar.

> Say hello to the Head-Up Display, or HUD, which will ultimately replace menus in Unity applications.

As much as I am intrigued by the Ubuntu HUD as such, getting rid of the menu metaphor completely — including keyboard shortcuts — is not just unnecessarily drastic. It is short-sighted and misguided for a number of reasons.

For sheer speed, keyboard shortcuts are hard to beat

When graphical user interfaces were in their infancy, keyboard shortcuts were “invented” to allow users to more quickly invoke commonly used menu commands.

Instead of opening a menu and selecting one of its items with the mouse, you press a combination of a special “command” modifier key and one or more additional keys.[1]

The key combination for a command is displayed next to its menu item, so you are reminded of it every time you select the command from the menu via a pointing device.

Once you’ve memorized a keyboard shortcut, pressing it takes a fraction of a second.

Compare this to the Undo operation in the “Introducing the HUD to Ubuntu” video (which you can watch embedded in Shuttleworth’s article or on YouTube): At 0:50 in the video, you can observe how the user literally types “undo” into the HUD.

It takes the user about three seconds to issue that command!

This single interaction in the video provides sufficient proof that getting rid of keyboard shortcuts would be a seriously foolish move.

The HUD is modal; a keyboard shortcut isn’t (quite)

To ensure that key presses reach their intended destination (either text entry into a document or text field, or a menu command), the computer needs to be put into a temporary “command” mode when you enter a keyboard shortcut.

The machine enters this mode when you press the Command key, and as soon as you let go of the key, it will leave the mode again. When you press the keys that make up a shortcut, Command is merely “first among equals”.

Once you’ve familiarized yourself with a shortcut, you will no longer “build it” key by key — “first, the Command key. Then the Shift key. And now press ‘S’, and there’s Save As…!”

Instead, you will “chord” the command, and it will feel like a single interaction step.

The HUD, in contrast, always requires at least three interaction steps:

- Pressing a key (or shortcut) to summon the HUD

- Pressing one or more keys to enter the search term

- Pressing a key to commit or cancel the selected command

Keyboard shortcuts are unambiguous and non-arbitrary

Depending on the search term you enter into the HUD, you may have to make a conscious selection from the list of search results that the HUD presents to you.

If you do have to make a selection beyond accepting or rejecting the “best match” that is automatically pre-selected for you, then that requires another interaction step, possibly consisting of pressing the up or down arrow keys multiple times.

In contrast, a keyboard shortcut always triggers one, and exactly one, command.

Command-P will always print the current document, but the top match that appears in the HUD when you enter a “P” may just as well be “Preferences”.
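To make the ambiguity concrete, here is a minimal Python sketch contrasting a shortcut table with a HUD-style search. The menu items, the app name `TextApp`, and the functions `shortcut` and `hud_search` are all hypothetical, and the naive prefix match merely stands in for whatever matching and ranking the real HUD uses. The items are listed in on-screen order, with Preferences in the application menu to the left of File, as on the Mac.

```python
from __future__ import annotations

# Hypothetical menu of an imaginary text editor, in on-screen order.
# On the Mac, Preferences lives in the application menu, left of File.
MENU_ITEMS = [
    "TextApp > Preferences",
    "File > Open",
    "File > Print",
    "File > Save",
    "Edit > Paste",
]

# A shortcut table binds each key to exactly one command.
SHORTCUTS = {
    "O": "File > Open",
    "P": "File > Print",
    "S": "File > Save",
}


def shortcut(key: str) -> str:
    """Deterministic lookup: one key, one command (KeyError if unbound)."""
    return SHORTCUTS[key.upper()]


def hud_search(query: str) -> list[str]:
    """Naive stand-in for a HUD search: every item whose label starts with
    the query, in menu order. The first entry is the pre-selected best match."""
    q = query.lower()
    return [
        item
        for item in MENU_ITEMS
        if item.split(" > ")[-1].lower().startswith(q)
    ]


if __name__ == "__main__":
    print(shortcut("p"))    # File > Print
    print(hud_search("p"))  # ['TextApp > Preferences', 'File > Print', 'Edit > Paste']
```

The shortcut lookup can only ever return one command, while the search returns a candidate list whose first entry depends on how items happen to be ordered or ranked. Here, typing “p” pre-selects Preferences, and reaching Print costs an extra arrow-key press.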

Speaking of the match between shortcut keys and commands: Shuttleworth claims that …

> Hotkeys are a sort of mental gymnastics, the HUD is a continuation of mental flow.

At least for basic shortcuts, that claim doesn’t hold water.

When assigning a key to a menu item, developers don’t make random picks. Instead, they carefully choose letters that have a meaningful correlation with the command, as in “Open”, “Save”, “Print”, “Close Window”, or “Quit”.

Note, by the way, how you would start the corresponding HUD search with the exact same letters that are used for the shortcuts!

In cases such as these, where you know exactly which command to execute, the HUD does not offer advantages in terms of learning, recalling, or finding commands.

Some shortcuts use less intuitive letters, like Command-Z for Undo, or Command-[comma] for opening an application’s preferences (on the Mac). Once you use them often enough, though, even these become second nature, especially when they are among the commands that are standardized across the entire platform and thus trigger the same function in all programs.

Shortcuts and menus leverage motor memory

When you issue a shortcut like Command-Control-F, you will likely not have to consciously place the three fingers on their respective target keys. After you’ve gained enough practice in “chording” the shortcut, your fingers will move into place automatically.

The same holds true when selecting certain commands with the mouse. For example, on a Mac, the position of the “About [this application]” command in the menu structure is standardized across the operating system.

Therefore, you know (from experience) that your first mouse pointer destination is “somewhere up there in the top-left corner, and it’s the menu directly to the right of that Apple thingy.” Your second destination is the first thing right underneath the menu’s title label.

Even though their positions aren’t standardized as strictly, many other often-used commands (for creating, opening, and saving files, for example) are found in similar locations in every application on the platform.[2]

Combined with motor memory, selecting a menu item this way can be surprisingly efficient.

Driving around with the mouse? All day!

Here is another quote from Shuttleworth’s article:

> So while there are modes of interaction where it’s nice to sit back and drive around with the mouse, we observe people staying more engaged and more focused on their task when they can keep their hands on the keyboard all the time.

For someone who mainly works with text, that may very well be true.

Watch someone work on non-text data, however, and you’ll observe that, for these people, keeping one hand on the mouse (or graphics tablet, etc.) and another on the keyboard is their standard “mode of interaction”.

This approach is common for any work that involves a continuous mixture of entering keyboard commands and moving objects on the screen, regardless of whether these objects are shapes on a canvas in a graphics editor, audio and MIDI snippets in a track arrange window in a music recording program, or images in a photo management app.

In such cases, you manipulate the on-screen elements with one hand on your pointing device of choice, while using the other hand to enter keyboard shortcuts for copying, pasting, etc., or pressing modifier keys for changing the cursor behavior from dragging to rotation, for example.

Consequently, assuming that keeping both hands on the keyboard at all times is the optimum solution for every type of user is pure nonsense.

For anyone who spends the better part of their working day inside applications like Photoshop, Pro Tools, or Aperture, it would be a nightmare to be forced to use a command line instead of being able to concurrently combine keyboard shortcuts with the extensive use of a pointing device.

The Ubuntu HUD’s content isn’t optimized for its use

When you watch the demo video, keep a close eye on the search results in the HUD. In its current implementation, the HUD merely provides a different view of the application’s menu structure. Its output is not optimized for use in this UI control.

For example, at 1:55, the user enters “alic” into the HUD to search for “Alice in Wonderland”. The matching result is listed as “Tools > Bookmarks > Alice in Wonderland”.

What average computer user thinks of an audio track as a bookmark? And how is a bookmark related to tools?

In cases like this, displaying the full menu path that encloses the “Alice in Wonderland” track adds no useful information. In fact, it makes the search result harder to decode.

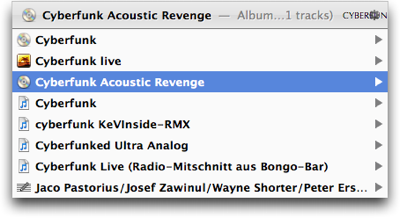

Compare that to how LaunchBar displays a music track: All you see is the track name.

The search results’ types are communicated by simple, reasonably intuitive icons. Unlike the Ubuntu HUD, LaunchBar hides the found items’ meaningless taxonomical overhead from the user.

Using the left and right arrow keys, you can further explore the results list. Moving “right” from the “Cyberfunk Acoustic Revenge” album, you get to see all tracks on that album.

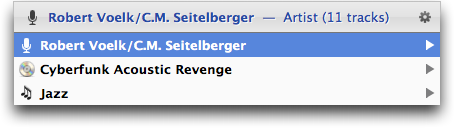

Digging into the selected track, you are presented with the track’s artist, the album that it’s on, and its genre.

The core difference between the Ubuntu HUD (as it is working now) and LaunchBar is that the former is based on a rigid menu structure, whereas the latter searches arbitrary data, and presents it in a way that is meaningful and highly accessible to the user.

Instead of mapping a flattened menu tree structure into a linear text list as demonstrated in the video, the HUD should display its information from a task perspective by:

- displaying contextual information when it is useful (“History > Planet Ubuntu” ⇒ “Browser History > Planet Ubuntu”),

- reducing redundancy (“Bookmarks > Bookmark This Page” ⇒ “Bookmark This Page”, or “Edit > Undo Fuzzy Glow” ⇒ “Undo Fuzzy Glow”), and

- speaking the user’s language (“Tools > Bookmarks > Alice in Wonderland” ⇒ “Play Music: Alice in Wonderland”).
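As a rough illustration of how these three rules might be applied, here is a minimal Python sketch that rewrites raw menu paths into task-oriented labels. Everything in it is an assumption: `TASK_LABELS`, `GENERIC_MENUS`, `present`, and `_echoed` are hypothetical names, and the rules are hand-written for the demo’s examples. A real HUD could not infer on its own that a “bookmark” in a music player is a track; the application would have to supply that knowledge.

```python
# Hypothetical per-application rules; a real HUD would need the app to supply
# this knowledge (e.g. that a "bookmark" in this player is a music track).
TASK_LABELS = {
    ("Tools", "Bookmarks"): "Play Music: {leaf}",
    ("History",): "Browser History > {leaf}",
}

# Top-level menus assumed to add nothing to a command's meaning.
GENERIC_MENUS = {"File", "Edit", "View"}


def _echoed(parent: str, leaf: str) -> bool:
    """True if the leaf label already repeats the parent menu's name,
    e.g. "Bookmarks" is echoed by "Bookmark This Page"."""
    stem = parent.lower()
    if stem.endswith("s"):
        stem = stem[:-1]  # crude singularization: "bookmarks" -> "bookmark"
    return stem in leaf.lower()


def present(path: str) -> str:
    """Turn a raw menu path like "Edit > Undo" into a HUD label."""
    *parents, leaf = path.split(" > ")
    # 1. Speak the user's language / add context where an explicit rule exists.
    template = TASK_LABELS.get(tuple(parents))
    if template is not None:
        return template.format(leaf=leaf)
    # 2. Otherwise reduce redundancy: drop generic or echoed parent menus.
    kept = [p for p in parents if p not in GENERIC_MENUS and not _echoed(p, leaf)]
    return " > ".join(kept + [leaf])


if __name__ == "__main__":
    for raw in [
        "History > Planet Ubuntu",
        "Bookmarks > Bookmark This Page",
        "Edit > Undo Fuzzy Glow",
        "Tools > Bookmarks > Alice in Wonderland",
    ]:
        print(present(raw))
```

Run against the examples from the list, this prints “Browser History > Planet Ubuntu”, “Bookmark This Page”, “Undo Fuzzy Glow”, and “Play Music: Alice in Wonderland”. Explicit rules are checked first so that app-supplied wording wins; the generic redundancy pass is only a fallback and can misfire on unusual menu names.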

In with the new, but do keep the old!

I applaud Ubuntu’s efforts to come up with new ways of interacting with “The Machine”.

The HUD has the potential to combine the best from advanced search technologies like Apple’s Spotlight, smart command line interfaces like LaunchBar or Quicksilver, and new ways to access an application’s menu commands into a single, extremely powerful, yet usable interface element.

Shuttleworth is probably right when he claims that:

> the HUD is faster than mousing through a menu, and easier to use than hotkeys since you just have to know what you want, not remember a specific key combination.

Conversely, though, the HUD is neither easier to use than a menu nor faster than hotkeys.

To use the HUD effectively, you need to know which commands it understands, whereas you can browse (and sometimes search) a menu to discover the commands and functions it makes available.

And while you have to memorize a hotkey in order to use it effectively, pressing it is much faster than entering a command via the HUD.

Therefore, I hope the powers that be at Ubuntu will revise the decision to completely tear out the support for classic menus from their operating system.

Instead, they should let their users decide whether the menu bar is displayed in its standard on-screen location, whether it’s stashed away in the panel, or whether it should be fully hidden from the user’s eye.

Sometimes, new ideas aren’t good enough to fully replace the old ones. But, more often than not, they’re just right to complement and extend them.

1. The Mac has a dedicated Command key, but other operating systems’ use of the Control key works just as well. For brevity and convenience, I will use the term “Command key” in this article.

2. Unless, of course, an app’s developer lacks the expertise required to properly design a native application for a specific operating system or window manager.